Abstract

Artificial intelligence (AI) has emerged as a transformative force in various sectors, including medicine, where it processes high-dimensional data to improve diagnostics and treatment outcomes. This review explores AI applications in urological surgery, highlighting advancements such as image classification and robotic assistance in surgical procedures. AI has demonstrated exceptional diagnostic accuracy, with some systems achieving up to 99.38% in detecting prostate cancer. Additionally, AI facilitates real-time anatomical recognition and instrument delineation, increasing surgical precision. While current robotic systems operate under human supervision, ongoing research aims to advance autonomous surgical capabilities. The future of AI in robotic surgery is promising, especially regarding the possibility of improved outcomes; nonetheless, challenges related to autonomy, safety, and ethics remain.


Keywords: Artificial intelligence, Robotics, Urology

HIGHLIGHTS

Artificial intelligence (AI) is transforming surgery, particularly through its integration with robotic systems. While current robots like the da Vinci system operate under human control, advancements in machine learning and deep learning are paving the way for greater autonomy. AI's impact spans surgical field enhancement, native tissue recognition, and automated tasks like suturing. These technologies improve precision, reduce surgical errors, and assist in skill assessment and postoperative predictions. In urology, AI-driven tools like Aquablation and robotic ureteroscopy are leading innovations. However, challenges in autonomy, ethical considerations, and adaptability to unexpected scenarios remain critical for future development.

INTRODUCTION

Artificial intelligence (AI) refers to intelligence demonstrated by machines or software, as opposed to human or animal intelligence. It was first recognized as an academic field at the Dartmouth Conference in 1956 [1]. AI technology is widely used across industries and scientific fields; notable examples include web search engines such as Google Search, internet recommendation systems, voice recognition technology, and self-driving cars. In medicine, AI is gaining attention for its ability to process and analyze the high-dimensional data generated by tests, diagnostics, and electronic medical records [2]. Models trained on these data are used to diagnose and predict diseases and to improve medical technology.

Recently, a variety of AI applications have been implemented in medicine. AI programs can carry out certain medical tasks, including image classification and object detection, and have achieved a diagnostic accuracy of up to 99.38% in the pathological diagnosis of prostate cancer [3]. In tumor radiation therapy, convolutional neural networks (CNNs) have distinguished tumors with an accuracy of 91.9% and a sensitivity of 89.1% [4]. AI has also been reported to be effective in evaluating surgical skills, detecting cancer early, and selecting treatment options [5,6]. AI models can assist surgeons in detecting positive surgical margins and can enable full automation of specific surgical steps [7,8]. AI is also transforming surgical education [9,10], with the potential to generate and deliver highly specialized feedback during surgery. Robotic surgery, in particular, is one of the most innovative and impactful areas of development. This review provides an overview of the reported role of AI in urological surgery to date and explores the potential for fully autonomous surgery using AI.
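Accuracy and sensitivity, the two figures reported for the CNN above, answer different questions, and a single accuracy value can hide missed cancers when disease is rare. The minimal Python sketch below computes accuracy, sensitivity, and specificity from confusion-matrix counts; the counts in the usage line are hypothetical and are not taken from the cited studies [3,4].

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return accuracy, sensitivity, and specificity from confusion-matrix counts.

    tp / fn: diseased cases classified correctly / missed.
    tn / fp: non-diseased cases classified correctly / falsely flagged.
    """
    total = tp + fp + tn + fn
    return {
        # Fraction of all cases classified correctly.
        "accuracy": (tp + tn) / total,
        # Fraction of diseased cases that are detected (true-positive rate).
        "sensitivity": tp / (tp + fn),
        # Fraction of non-diseased cases that are correctly ruled out.
        "specificity": tn / (tn + fp),
    }


# Hypothetical screening set: 10 cancers among 1,000 cases.
metrics = diagnostic_metrics(tp=1, fp=1, tn=989, fn=9)
print(metrics)
```

In this hypothetical example accuracy is 0.99 while sensitivity is only 0.1, because 9 of the 10 cancers are missed; this is why sensitivity is usually reported alongside accuracy.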

OVERVIEW

AI refers to computer systems and robotic systems capable of performing tasks that require human intelligence or mobility [11]. ISO/IEC TR 24028:2020 defines AI as “the capability of an engineered system to acquire, process, and apply knowledge and skills.” In medicine, AI can be applied through data analysis to help improve treatment outcomes and patient experiences. This can assist doctors in making more accurate decisions and predicting treatment outcomes with greater certainty.

AI systems solve problems by mimicking aspects of human cognition, which requires computers to be trained on data. Machine learning (ML), together with its subset deep learning (DL), is a subfield of AI that enables computers to make predictions based on patterns in the underlying data. Medical professionals must be involved in analyzing how existing data interact with ML algorithms, because errors may occur without expert oversight [12]. ML can integrate multiple data sources and generate predictions with a level of accuracy considered unattainable with traditional statistics, providing clinically valuable feedback [13]. AI assistance can, in turn, reduce surgical errors and thereby enhance surgeon performance (Table 1).

As AI has advanced, an increasing number of surgeries are being performed with robotic assistance. Current surgical robots are limited to a “master-slave” role, in which the robot has no autonomy without a human operator. However, recent advances in AI and ML are expanding the capabilities of surgical robots and could improve the surgical experience in the operating room. Surgical robots operate using data captured through sensors and imaging, and these data, collected during operations, can also be a key driver of AI innovation in robotic surgery.

However, it is important to distinguish between robot-assisted surgery and autonomous surgery. Automation and autonomy lie on a spectrum, with full autonomy being the most advanced form. Automated machines operate under a certain level of operator control; their movements are entirely predictable and follow a set procedure. In automated systems, changes in operation are limited to small adjustments based on predetermined parameters under specific conditions. If the variables involved become too large, an automated system cannot adapt and will fail. Autonomy, by contrast, refers to the ability to make large-scale adaptations to changes in the environment without external user input. This is achieved through task “planning” and requires tools that can recognize a broader set of data and situations [14]. The degree of surgical autonomy can be categorized into 6 levels; levels 4 and 5 remain largely theoretical, as no standardized applications exist for them [14,15] (Table 2).
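The distinction between automation and autonomy can be made concrete in code. The Python sketch below encodes the 6 levels listed in Table 2 and, as a hypothetical example of an automated (not autonomous) controller, applies small corrections only within a predetermined bound and hands control back to the operator when the deviation exceeds it. The names `AutonomyLevel`, `automated_correction`, and `max_correction` are illustrative assumptions and do not belong to any surgical system.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Levels of surgical autonomy as listed in Table 2."""
    NO_AUTONOMY = 0
    ROBOT_ASSISTANCE = 1
    TASK_AUTONOMY = 2
    CONDITIONAL_AUTONOMY = 3
    HIGH_AUTONOMY = 4
    FULL_AUTONOMY = 5


def requires_human(level: AutonomyLevel) -> bool:
    """Below full autonomy, a human operator or supervisor remains involved."""
    return level < AutonomyLevel.FULL_AUTONOMY


class OutsidePredeterminedRange(Exception):
    """Raised when an automated system meets a deviation it was not designed for."""


def automated_correction(setpoint: float, measured: float, max_correction: float) -> float:
    """Hypothetical automated behavior: correct small deviations, fail on large ones.

    The rule is fixed in advance. The function never re-plans; if the deviation
    exceeds the predetermined bound, it stops and defers to the operator.
    """
    error = setpoint - measured
    if abs(error) > max_correction:
        raise OutsidePredeterminedRange(
            f"deviation {error:.2f} exceeds bound {max_correction:.2f}; operator control required"
        )
    return error
```

An autonomous system would instead re-plan when the bound is exceeded; that planning step is what distinguishes levels 4 and 5 and is deliberately not sketched here.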

To apply AI successfully in robotic surgery, it is essential to develop a multifaceted AI platform that can identify the patient's anatomical structures and track both these structures and the movement of surgical instruments during the procedure. Many studies have laid the groundwork for this technology. For example, Nosrati et al. [16] developed a multimodal approach that can distinguish between instruments, blood, and fat tissue during dissection, and they were able to align preoperative data with surgical images during partial nephrectomy. Although current applications cannot yet adapt to changing conditions, research in this area is very promising.

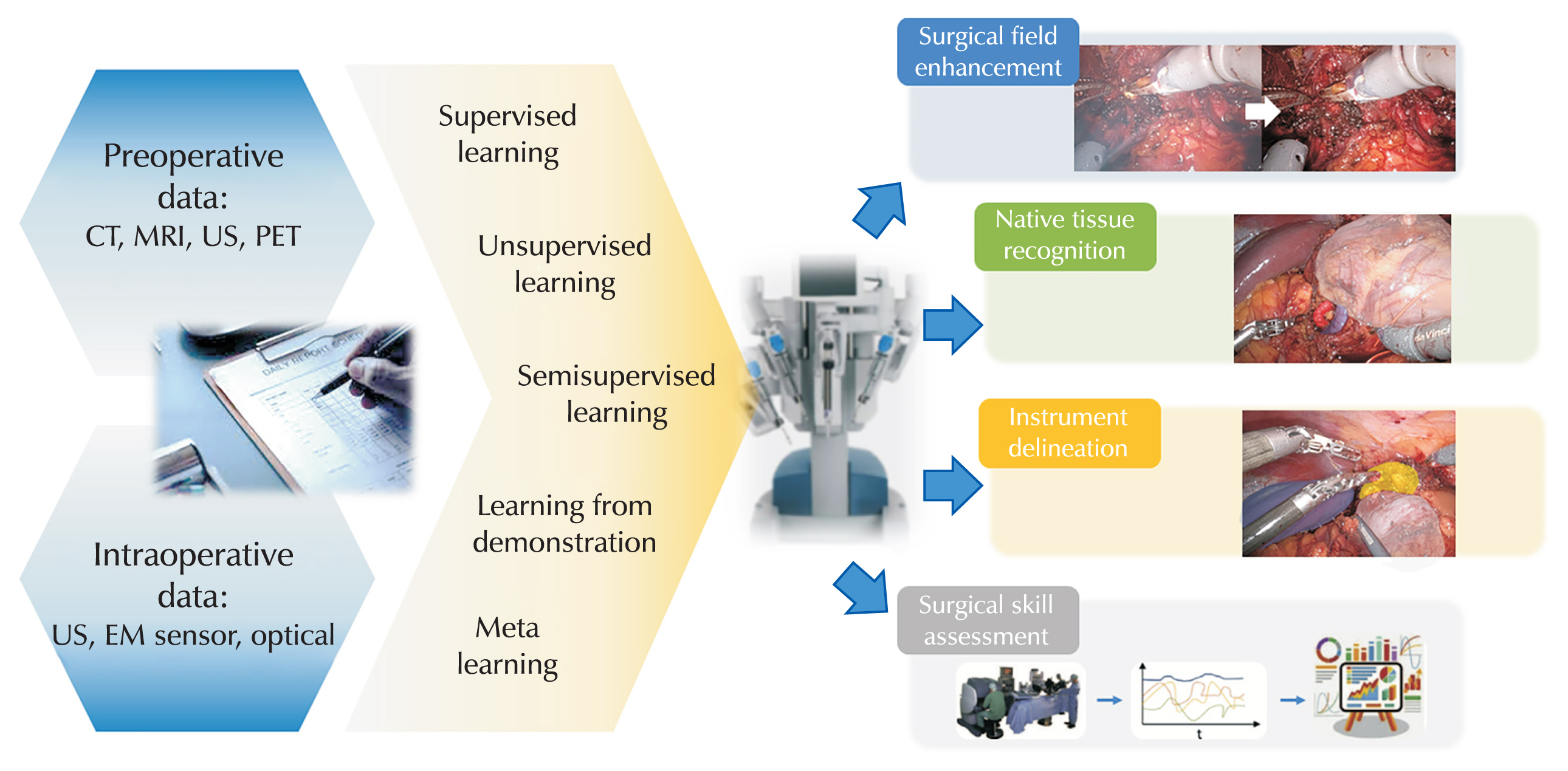

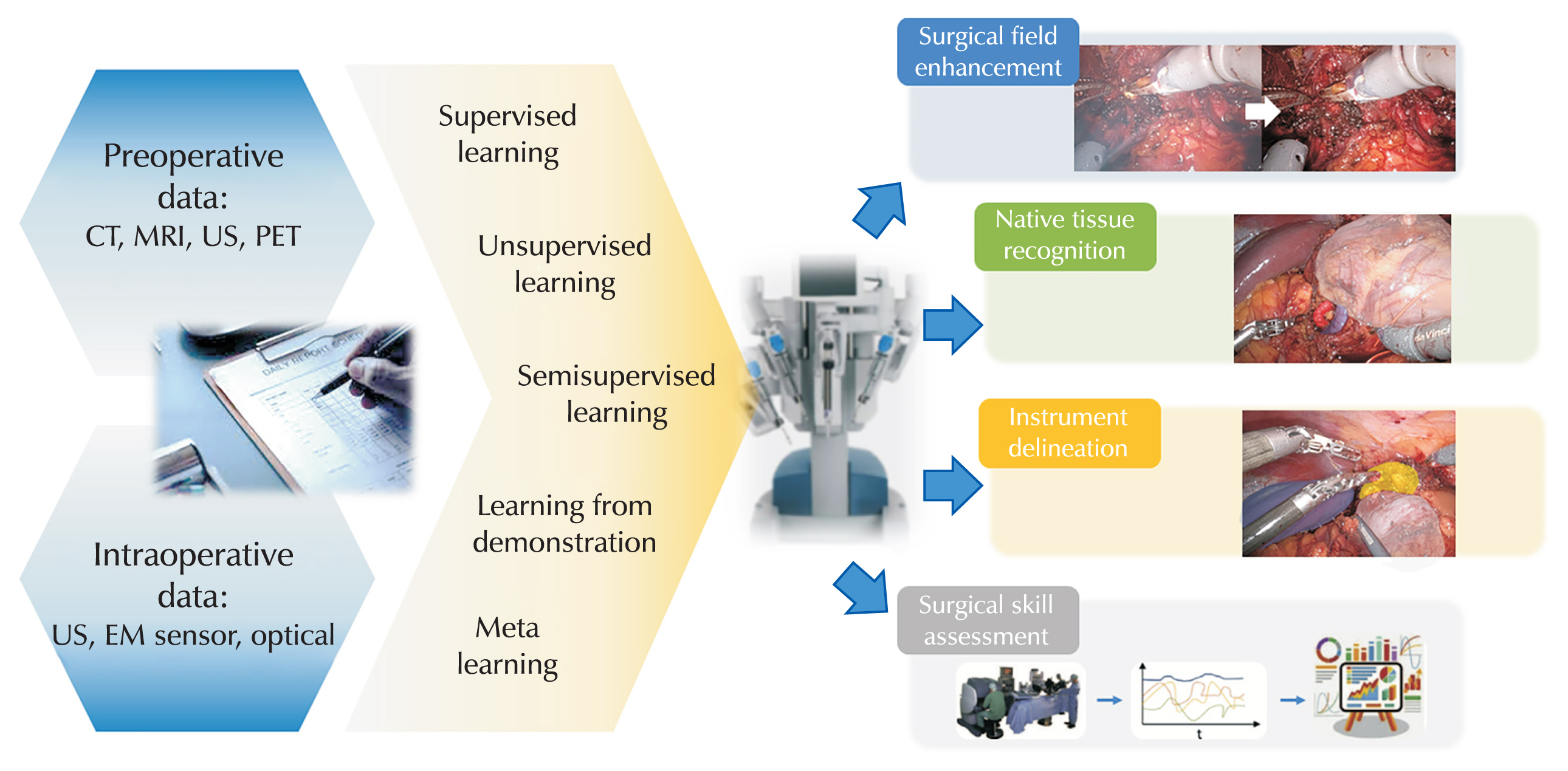

AI IN THE FIELD OF SURGERY

1. Surgical Field Enhancement

Robotic surgery is performed using cameras and instruments within the anatomical spaces of the abdomen. Identification of anatomical structures during surgery can be enhanced through real-time AI image synthesis. Because the surgical field changes continuously as the procedure progresses, researchers are investigating techniques such as noise reduction, autofocusing, and color correction of real-time camera footage to improve intraoperative visualization. In addition, electrocautery during robotic surgery can produce smoke that temporarily obscures the view (Fig. 1). To address this problem, a deep learning network (a residual Swin transformer) has been proposed to remove smoke from surgical video, yielding a clearer surgical image [17].

AI can also provide information about native tissue during surgery. It is essential to identify the “surgical plane,” the anatomical plane where tissues meet that is safe to dissect during the procedure. Kumazu et al. [18] trained a DL model on videos of robot-assisted gastrectomy to automatically identify safe dissection planes composed of loose connective tissue fibers.

Another important goal is minimizing positive surgical margins to prevent cancer recurrence. Bianchi et al. [19] used preoperative multiparametric magnetic resonance imaging to guide the site of intraoperative frozen section sampling for confirmation of surgical margins during robot-assisted radical prostatectomy (RARP). By creating an augmented reality 3-dimensional (3D) model and projecting it onto the screen of the robotic console, they were able to identify optimal locations free of cancer. In this study, the positive surgical margin rate was significantly lower than with the standard approach.

In the surgical field, native tissue and surgical instruments coexist, and distinguishing between them is one of the greatest challenges for autonomous surgery. De Backer et al. [20] proposed a DL model that recognizes inorganic items such as surgical instruments during robot-assisted kidney surgery (Fig. 1). Although a delay of 0.5 seconds was measured, the model achieved high accuracy.

One of the biggest drawbacks of robot-assisted surgery is the lack of tactile sensation. To address this, Doria et al. [21] applied tactile feedback to facilitate the identification of anatomical structures, developing a tactile model specifically for uterine myomas for the first time. They reported that it enabled differentiation of the softness of uterine myomas in a manner similar to direct palpation with a physician's fingers. Such feedback can help prevent complications such as injury to normal tissue or bleeding caused by excessive force during surgery. Related force-feedback technology has been implemented in the recently released da Vinci 5 (Intuitive Surgical, Inc., Sunnyvale, CA, USA).

Stepwise automation aims to reduce the workload during surgery by automating specific surgical tasks. One such effort is the development of algorithms for automatic camera positioning in the da Vinci surgical robot system (Intuitive Surgical, Inc.) [22]. In conventional laparoscopic surgery, the camera is positioned by a surgical assistant, so visibility can differ substantially depending on the assistant's skill. In robotic surgery, by contrast, the surgeon must control the camera directly, and automating this process could shorten operative time and reduce complications. Automated suturing by surgical robots is also under study. Supervised autonomous robotic soft tissue surgery has been reported since 2016 [23]. Although robotic suturing took longer than suturing by skilled human surgeons, it demonstrated consistency in factors such as stitch spacing and depth [24]. This research represents a major advance in AI-enhanced robotic surgery.
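Automatic camera positioning can be illustrated with a deliberately simple rule: keep the midpoint of the two instrument tips centered in the view, and move the camera only when that midpoint leaves a central dead zone, so the view does not jitter with every small hand movement. This is a hypothetical sketch for illustration; it is not the published algorithm of Eslamian et al. [22], and the coordinate frame, the `deadzone` parameter, and the function names are assumptions.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]  # (x, y, z) in a hypothetical camera frame, in mm


def instrument_midpoint(left_tip: Point, right_tip: Point) -> Point:
    """Midpoint between the two instrument tips: the point the camera tracks."""
    return tuple((a + b) / 2 for a, b in zip(left_tip, right_tip))


def camera_adjustment(view_center: Point, left_tip: Point, right_tip: Point,
                      deadzone: float) -> Point:
    """Translation that re-centers the view on the instruments, or zero inside the dead zone."""
    target = instrument_midpoint(left_tip, right_tip)
    offset = tuple(t - c for t, c in zip(target, view_center))
    distance = math.sqrt(sum(o * o for o in offset))
    if distance <= deadzone:
        return (0.0, 0.0, 0.0)  # small movements: keep the camera still
    return offset
```

The dead zone is the main design choice: without it, the camera would follow every tremor of the instruments, which is the opposite of the stable view a skilled assistant provides.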

ML algorithms that integrate analysis of movements, energy usage, and device utilization during surgery allow accurate, quantitative assessment of surgical skills [25,26]. A key step in ML-based skill evaluation is extracting and quantifying meaningful surgeon gestures that reflect dexterity. These tools can provide feedback during the learning curve and support credentialing.
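As a minimal sketch of the feature-extraction step, the Python code below derives 3 commonly used kinematic descriptors from a sampled instrument-tip trajectory: path length, task duration, and mean jerk (a smoothness measure obtained by repeated finite differences). These particular features are illustrative assumptions; the cited studies [25–27] use their own feature sets and models, and a real pipeline would pass such features to a trained classifier.

```python
import math
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def _norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(c * c for c in v))


def _derivative(samples: List[Vector], dt: float) -> List[Vector]:
    """Forward finite difference between consecutive samples."""
    return [tuple((b - a) / dt for a, b in zip(p, q)) for p, q in zip(samples, samples[1:])]


def kinematic_features(positions: List[Vector], dt: float) -> dict:
    """Path length, duration, and mean jerk magnitude of an instrument-tip trajectory.

    positions: tip coordinates sampled every dt seconds.
    """
    velocity = _derivative(positions, dt)
    acceleration = _derivative(velocity, dt)
    jerk = _derivative(acceleration, dt)
    return {
        # Total distance traveled by the tip (economy of motion).
        "path_length": sum(
            _norm(tuple(b - a for a, b in zip(p, q))) for p, q in zip(positions, positions[1:])
        ),
        # Time from first to last sample.
        "duration": dt * (len(positions) - 1),
        # Lower mean jerk indicates smoother, more fluid movement.
        "mean_jerk": sum(_norm(j) for j in jerk) / len(jerk) if jerk else 0.0,
    }
```

A perfectly straight, constant-speed movement yields a mean jerk of 0, whereas hesitant stop-and-start motion raises it; this mirrors the intuition that experienced surgeons move more efficiently and harmoniously.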

Ershad et al. [27] analyzed the “style of instrumental movement” of surgeons. The theoretical basis of this approach stems from the idea that experienced surgeons perform surgeries in a more comfortable, harmonious, and efficient manner compared to novice surgeons. They collected kinematic data from 14 surgeons with varying levels of robotic surgery experience, each performing 2 tasks (ring transfer and suturing) 3 times. The advantage of this approach is that it does not require expert knowledge to interpret skill levels (Fig. 1).

According to Chen et al. [28], an AI system trained with clinical, pathological, imaging, and genomic data outperformed the D'Amico risk classification in diagnosis and outcome prediction. In addition to patient-related factors, however, surgeon-related factors also affect postoperative outcomes. Hung et al. [29] evaluated a DL model for predicting urinary continence recovery in 100 patients who underwent RARP. In this study, 3 items related to vesicourethral anastomosis and 1 item related to apical dissection were the most important factors for achieving urinary continence. Consistent with previous reports, this suggests that surgical technique may matter more than patient characteristics for achieving early continence [30].

AI systems can assist doctors by using augmented reality to provide guidance on anatomical structures during surgery [31]. When augmented reality images, such as 3D reconstructions of the surgical field, are accurately overlaid on the native anatomical structures, the interpretation of the surgical field can be improved [32]. However, due to a lack of accuracy in real-world environments, practical applications remain experimental.
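An accurate overlay depends on registration: estimating the transformation that maps a preoperative model onto the intraoperative view. As a simplified sketch, the Python code below computes the least-squares rotation and translation that align paired 2D landmarks (the 2D case of the Procrustes/Kabsch solution) and reports the root-mean-square residual, one way to quantify overlay error. Real systems, such as the one described by Kong et al. [32], work in 3D and account for tissue deformation, which this rigid 2D example deliberately ignores; the landmark pairing is assumed to be known.

```python
import math
from typing import Sequence, Tuple

Point2D = Tuple[float, float]


def _centroid(points: Sequence[Point2D]) -> Point2D:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def transform(theta: float, t: Point2D, p: Point2D) -> Point2D:
    """Rotate p by theta (radians) about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])


def rigid_register_2d(model_pts: Sequence[Point2D],
                      image_pts: Sequence[Point2D]) -> Tuple[float, Point2D]:
    """Least-squares rotation angle and translation mapping model_pts onto image_pts.

    Points must be paired: model_pts[i] corresponds to image_pts[i].
    """
    mx, my = _centroid(model_pts)
    ix, iy = _centroid(image_pts)
    cross = 0.0  # sum of 2D cross products of centered point pairs
    dot = 0.0    # sum of dot products of centered point pairs
    for (px, py), (qx, qy) in zip(model_pts, image_pts):
        ax, ay = px - mx, py - my
        bx, by = qx - ix, qy - iy
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)
    # The translation maps the rotated model centroid onto the image centroid.
    c, s = math.cos(theta), math.sin(theta)
    t = (ix - (c * mx - s * my), iy - (s * mx + c * my))
    return theta, t


def rms_error(theta: float, t: Point2D,
              model_pts: Sequence[Point2D], image_pts: Sequence[Point2D]) -> float:
    """Root-mean-square distance between transformed model points and image points."""
    total = 0.0
    for p, q in zip(model_pts, image_pts):
        x, y = transform(theta, t, p)
        total += (x - q[0]) ** 2 + (y - q[1]) ** 2
    return math.sqrt(total / len(model_pts))
```

With noise-free landmarks the residual is essentially zero; in the operating room, tissue deformation and landmark detection errors keep it well above zero, which is one reason augmented reality guidance remains experimental.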

AI IN THE FIELD OF UROLOGY

The integration of AI into urological surgery is transforming patient care, offering substantial improvements in precision, efficiency, and clinical outcomes. Robotic systems such as the da Vinci surgical robot system are central to modern urology, enabling minimally invasive procedures with enhanced precision [33]. AI has further advanced these systems by providing real-time analytics, guiding surgeons during operations, and predicting potential complications such as intraoperative bleeding [34,35]. Integrating AI with robotic systems, such as those used for prostatectomy, has enhanced procedural precision, minimized invasiveness, and improved patient outcomes [35–37]. AI-powered tools analyze intraoperative data to provide real-time guidance and improve decision-making.

Beyond the da Vinci surgical robot system, several robotic systems with autonomous functions are currently used in urology. These include newer technologies such as Aquablation, an automated robotic system for the surgical treatment of benign prostatic hyperplasia, and the robot-assisted flexible ureteroscope system. Aquablation is an advanced level 3 (conditional autonomy) device that uses a water-jet system to resect prostate tissue precisely [38]. The surgeon defines the resection boundaries, and the system then performs the resection autonomously, ensuring accuracy and consistency. Robot-assisted flexible ureteroscopy improves surgeon convenience by automatically positioning the flexible ureteroscope at the surgical site [39]. Both technologies represent significant advances among the autonomous robotic systems currently available for clinical use and highlight urology as a leading field in the adoption and development of autonomous robotic surgical devices.
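The division of labor described for Aquablation, in which the surgeon sets the boundaries and the machine executes within them, is a general supervisory pattern. The Python sketch below illustrates only that pattern: it clamps the planned treatment depth in each angular sector to a surgeon-defined limit and refuses to treat any sector the surgeon has not approved. It is a hypothetical example, not AquaBeam's control software; the sector model, millimeter units, and all names are assumptions.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class SectorLimit:
    """Hypothetical surgeon-approved limit for one angular sector."""
    start_deg: float     # inclusive
    end_deg: float       # exclusive
    max_depth_mm: float  # deepest treatment the surgeon allows in this sector


def allowed_depth(angle_deg: float, limits: Sequence[SectorLimit]) -> float:
    """Maximum permitted depth at this angle; 0.0 if no surgeon-approved sector covers it."""
    for limit in limits:
        if limit.start_deg <= angle_deg < limit.end_deg:
            return limit.max_depth_mm
    return 0.0


def constrain_plan(plan: Sequence[Tuple[float, float]],
                   limits: Sequence[SectorLimit]) -> List[Tuple[float, float]]:
    """Clamp each (angle_deg, requested_depth_mm) step to the surgeon-defined boundary."""
    return [(angle, min(depth, allowed_depth(angle, limits))) for angle, depth in plan]


limits = [SectorLimit(0.0, 90.0, 10.0), SectorLimit(90.0, 180.0, 5.0)]
plan = [(45.0, 12.0), (100.0, 3.0), (200.0, 8.0)]
print(constrain_plan(plan, limits))
```

Defaulting to zero depth outside every approved sector makes the safe behavior the fallback: the machine acts autonomously only inside limits a human has explicitly set.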

FUTURE PROSPECTS: AI FOR THE DEVELOPMENT OF AUTONOMOUS ROBOTIC SURGERY

AI systems and ML models are enabling the next generation of surgical robots, which can learn various tasks and perform them autonomously under human supervision. Although surgical robot systems have achieved significant success, autonomous systems still have a long way to go. They currently cannot analyze the complexity of human tissue, nor can they handle unexpected events independently. Nevertheless, autonomous robotic surgery appears feasible for procedures that require precise incisions in confined areas.

Autonomous robots performing surgical tasks on the human body were initially studied in cadavers [40]. Cadaver models are well suited to training autonomous robots because they allow procedures to be performed without concerns about the toxicity of the various contrast agents or tracers used. Using tracers or contrast agents during real-time surgery can help identify organs and blood vessels, allowing surgical techniques to be customized. However, more time is needed before autonomous robots can be used clinically, especially because performance standards are required to evaluate autonomous robotic surgery. The most critical issues to assess are adaptation to unexpected events and the accuracy and repeatability of surgical actions [40].

As with autonomous vehicles, ethical and safety issues are arising in relation to autonomous robotic surgery [40]. First, surgical robots cannot be used without patient consent. Second, it is crucial to evaluate what the robot has learned, and how, to prevent inappropriate information storage and ensure patient safety. Problems may arise in unexpected situations because the robot system has no predetermined responses for untrained scenarios. Surgery in particular is a process in which errors must not occur, so it is crucial to understand how the robot has learned to handle specific situations [41]. Bias in the training data is especially concerning, as it can produce biased algorithms. Surgery involves a series of decisions in which each choice influences subsequent steps: if the initial incision is not made appropriately, the subsequent surgical view will differ entirely from the original plan. Third, robots are less likely to make the best choice in untrained scenarios. Distinguishing between malignant and benign lesions is often challenging for both humans and ML systems, and human experts tend to exercise caution with ambiguous lesions. Algorithms should therefore be adjusted to weigh the clinical significance of a diagnosis rather than focusing solely on accuracy [42]. Fourth, ethical issues also arise when surgical videos are used for training: permission must be obtained from the owner of each dataset, and patients must consent to the recording and use of their videos.
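One way to make an algorithm weigh the significance of a diagnosis rather than accuracy alone is cost-sensitive thresholding: choose the decision threshold that minimizes expected cost when a missed malignancy is penalized more heavily than a false alarm. The Python sketch below illustrates the idea on hypothetical predicted probabilities; the cost values are assumptions chosen for illustration rather than clinically derived, and this specific method is not taken from [42].

```python
from typing import List, Sequence


def expected_cost(probs: Sequence[float], labels: Sequence[int], threshold: float,
                  cost_fn: float, cost_fp: float) -> float:
    """Total misclassification cost when cases with prob >= threshold are called malignant."""
    cost = 0.0
    for p, y in zip(probs, labels):
        predicted_malignant = p >= threshold
        if y == 1 and not predicted_malignant:
            cost += cost_fn  # missed malignancy
        elif y == 0 and predicted_malignant:
            cost += cost_fp  # benign lesion flagged (e.g., an unnecessary biopsy)
    return cost


def choose_threshold(probs: Sequence[float], labels: Sequence[int],
                     cost_fn: float, cost_fp: float) -> float:
    """Pick the candidate threshold with the lowest expected cost."""
    # Each observed probability is a candidate; infinity means "call everything benign".
    candidates: List[float] = sorted(set(probs)) + [float("inf")]
    return min(candidates, key=lambda t: expected_cost(probs, labels, t, cost_fn, cost_fp))


# Hypothetical model outputs (probability of malignancy) and true labels (1 = malignant).
probs = [0.10, 0.20, 0.35, 0.40, 0.60, 0.80, 0.90]
labels = [0, 0, 1, 0, 0, 1, 1]

print(choose_threshold(probs, labels, cost_fn=1.0, cost_fp=1.0))  # prints 0.8
print(choose_threshold(probs, labels, cost_fn=5.0, cost_fp=1.0))  # prints 0.35
```

Making a missed malignancy 5 times as costly lowers the chosen threshold from 0.8 to 0.35, trading extra false alarms for no missed cancers in this toy set; this mirrors the caution human experts apply to ambiguous lesions.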

CONCLUSIONS

Robotic systems provide an excellent environment for applying AI in surgery. With ML approaches, large amounts of data can be evaluated and interpreted clinically, offering important feedback on the acquisition of surgical skills, the efficiency of surgical planning, and the prediction of postoperative outcomes. However, widespread implementation will require standardized data collection, interpretation, and validation across a broader range of subjects.

Although autonomous vehicles have come closer to reality, they still face limitations, and the road to autonomous robotic surgery is still long. The implementation of AI in robotic surgery is clearly expanding rapidly, and even more exciting developments can be expected. However, many obstacles remain before fully autonomous surgery can be realized in urology.

NOTES


Funding/Support

This study received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.


Conflict of Interest

The authors have nothing to disclose.


Author Contribution

Conceptualization: DYL, HJY; Methodology: HJY; Software: DYL; Validation: HJY; Formal analysis: DYL; Investigation: DYL, HJY; Resources: DYL; Data Curation: DYL; Visualization: DYL; Supervision: HJY; Project administration: DYL, HJY; Writing - original draft preparation: DYL, HJY; Writing - review & editing: DYL, HJY.

Fig. 1. Applications of artificial intelligence in surgery. CT, computed tomography; MRI, magnetic resonance imaging; US, ultrasound; PET, positron emission tomography; EM sensor, electromagnetic sensor.

Table 1. Definitions of terms related to artificial intelligence

| Terminology | Definition |
|---|---|
| Artificial intelligence | Technologies or fields aimed at implementing human abilities such as cognition, reasoning, and judgment in computers. |
| Algorithm | A set of instructions to follow for calculations or other tasks. |
| Artificial neural network | An algorithm modeled after the neural networks of living organisms, particularly the visual and auditory cortices of humans. Artificial neural networks are based on a collection of interconnected units or nodes called artificial neurons. |
| Computer vision | A field that processes visual data using computers. It is a branch of artificial intelligence that enables computers and systems to derive meaningful information from digital images, videos, and other visual inputs, allowing them to take actions or make recommendations based on that information. |
| Convolutional neural network | The artificial neural network most commonly used to analyze visual images. It is a deep neural network technique that applies filtering (convolution) operations within an artificial neural network, allowing efficient image processing. |
| Deep learning | A type of machine learning based on artificial neural networks. It is a branch of machine learning that teaches computers to think like humans, using multiple layers of processing to extract progressively higher-level features from the data. |
| Machine learning | The process of semiautomatically extracting knowledge and insights from data. The field evolved from the study of pattern recognition and computational learning theory and has been developed within statistics, computer science, and artificial intelligence. It enables the training of algorithms that can quickly discover and identify more complex patterns and relationships than traditional statistical models, which focus on a limited number of patient variables. |
| Natural language processing | Technologies for processing natural language, the language used by humans, on computers. This field of artificial intelligence is concerned with enabling computers to understand text and speech in a manner similar to humans. |

Table 2. Levels of surgical autonomy

| Level | Description | Examples |
|---|---|---|
| 0 | No autonomy | A robot that mimics human movements |
| 1 | Robot assistance | Da Vinci surgical system |
| 2 | Task autonomy | Automatic placement of seeds for prostate brachytherapy |
| 3 | Conditional autonomy | Prostate Aquablation using AquaBeam |
| 4 | High autonomy | Robots planning and performing surgery under human supervision |
| 5 | Full autonomy | Robots independently planning and performing surgery without human intervention |

REFERENCES

- 1. Moor J. The Dartmouth College artificial intelligence conference: the next fifty years. AI Mag 2006;27:87-91.

- 2. Holmes J, Sacchi L, Bellazzi R. Artificial intelligence in medicine. Ann R Coll Surg Engl 2004;86:334-8.

- 3. Zhang C, Zhang Q, Gao X, Liu P, Guo H. High accuracy and effectiveness with deep neural networks and artificial intelligence in pathological diagnosis of prostate cancer: initial results. 33rd European Association of Urology Annual Conference; 2018 Mar 16–20; Copenhagen, Denmark. 2018.

- 4. Kamnitsas K, Ledig C, Newcombe VFJ, Simpson JP, Kane AD, Menon DK, et al. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal 2017;36:61-78.

- 5. Hunter B, Hindocha S, Lee RW. The role of artificial intelligence in early cancer diagnosis. Cancers (Basel) 2022;14:1524.

- 6. Andras I, Mazzone E, van Leeuwen FW, De Naeyer G, van Oosterom MN, Beato S, et al. Artificial intelligence and robotics: a combination that is changing the operating room. World J Urol 2020;38:2359-66.

- 7. Pradipta AR, Tanei T, Morimoto K, Shimazu K, Noguchi S, Tanaka K. Emerging technologies for real-time intraoperative margin assessment in future breast-conserving surgery. Adv Sci 2020;7:1901519.

- 8. Gumbs AA, Frigerio I, Spolverato G, Croner R, Illanes A, Chouillard E, et al. Artificial intelligence surgery: how do we get to autonomous actions in surgery? Sensors 2021;21:5526.

- 9. Sheikh AY, Fann JI. Artificial intelligence: can information be transformed into intelligence in surgical education? Thorac Surg Clin 2019;29:339-50.

- 10. Mari V, Spolverato G, Ferrari L. The potential of artificial intelligence as an equalizer of gender disparity in surgical training and education. Artif Intell Surg 2022;2:122-31.

- 11. Wang P. On defining artificial intelligence. J Artif Gen Intell 2019;10:1-37.

- 12. Wang D, Khosla A, Gargeya R, Irshad H, Beck A. Deep learning for identifying metastatic breast cancer. arXiv:1606.05718v1 [Preprint]. 2016 [cited 2024 Apr 19]. Available from: https://doi.org/10.48550/arXiv.1606.05718

- 13. Hashimoto DA, Rosman G, Rus D, Meireles OR. Artificial intelligence in surgery: promises and perils. Ann Surg 2018;268:70-6.

- 14. Attanasio A, Scaglioni B, De Momi E, Fiorini P, Valdastri P. Autonomy in surgical robotics. Annu Rev Control Robot Auton Syst 2021;4:651-79.

- 15. Connor MJ, Dasgupta P, Ahmed HU, Raza A. Autonomous surgery in the era of robotic urology: friend or foe of the future surgeon? Nat Rev Urol 2020;17:643-9.

- 16. Nosrati MS, Amir-Khalili A, Peyrat JM, Abinahed J, Al-Alao O, Al-Ansari A, et al. Endoscopic scene labelling and augmentation using intraoperative pulsatile motion and colour appearance cues with preoperative anatomical priors. Int J Comput Assist Radiol Surg 2016;11:1409-18.

- 17. Wang F, Sun X, Li J. Surgical smoke removal via residual Swin transformer network. Int J Comput Assist Radiol Surg 2023;18:1417-27.

- 18. Kumazu Y, Kobayashi N, Kitamura N, Rayan E, Neculoiu P, Misumi T, et al. Automated segmentation by deep learning of loose connective tissue fibers to define safe dissection planes in robot-assisted gastrectomy. Sci Rep 2021;11:21198.

- 19. Bianchi L, Chessa F, Angiolini A, Cercenelli L, Lodi S, Bortolani B, et al. The use of augmented reality to guide the intraoperative frozen section during robot-assisted radical prostatectomy. Eur Urol 2021;80:480-8.

- 20. De Backer P, Van Praet C, Simoens J, Peraire Lores M, Creemers H, Mestdagh K, et al. Improving augmented reality through deep learning: real-time instrument delineation in robotic renal surgery. Eur Urol 2023;84:86-91.

- 21. Doria D, Fani S, Giannini A, Simoncini T, Bianchi M. Enhancing the localization of uterine leiomyomas through cutaneous softness rendering for robot-assisted surgical palpation applications. IEEE Trans Haptics 2021;14:503-12.

- 22. Eslamian S, Reisner LA, Pandya AK. Development and evaluation of an autonomous camera control algorithm on the da Vinci Surgical System. Int J Med Robot 2020;16:e2036.

- 23. Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A, Kim PC. Supervised autonomous robotic soft tissue surgery. Sci Transl Med 2016;8:337ra64.

- 24. Saeidi H, Opfermann JD, Kam M, Wei S, Leonard S, Hsieh MH, et al. Autonomous robotic laparoscopic surgery for intestinal anastomosis. Sci Robot 2022;7:eabj2908.

- 25. Wang Z, Majewicz Fey A. Deep learning with convolutional neural network for objective skill evaluation in robot-assisted surgery. Int J Comput Assist Radiol Surg 2018;13:1959-70.

- 26. Fard MJ, Ameri S, Darin Ellis R, Chinnam RB, Pandya AK, Klein MD. Automated robot-assisted surgical skill evaluation: predictive analytics approach. Int J Med Robot 2018 Feb;14(1):[Epub].

- 27. Ershad M, Rege R, Majewicz Fey A. Automatic and near real-time stylistic behavior assessment in robotic surgery. Int J Comput Assist Radiol Surg 2019;14:635-43.

- 28. Chen J, Remulla D, Nguyen JH, Dua A, Liu Y, Dasgupta P, et al. Current status of artificial intelligence applications in urology and their potential to influence clinical practice. BJU Int 2019;124:567-77.

- 29. Hung AJ, Chen J, Ghodoussipour S, Oh PJ, Liu Z, Nguyen J, et al. A deep-learning model using automated performance metrics and clinical features to predict urinary continence recovery after robot-assisted radical prostatectomy. BJU Int 2019;124:487-95.

- 30. Goldenberg MG, Goldenberg L, Grantcharov TP. Surgeon performance predicts early continence after robot-assisted radical prostatectomy. J Endourol 2017;31:858-63.

- 31. Navaratnam A, Abdul-Muhsin H, Humphreys M. Updates in urologic robot assisted surgery. F1000Res 2018;7:F1000 Faculty Rev-1948.

- 32. Kong SH, Haouchine N, Soares R, Klymchenko A, Andreiuk B, Marques B, et al. Robust augmented reality registration method for localization of solid organs' tumors using CT-derived virtual biomechanical model and fluorescent fiducials. Surg Endosc 2017;31:2863-71.

- 33. Bellos T, Manolitsis I, Katsimperis S, Juliebø-Jones P, Feretzakis G, Mitsogiannis I, et al. Artificial intelligence in urologic robotic oncologic surgery: a narrative review. Cancers (Basel) 2024;16:1775.

- 34. Piana A, Gallioli A, Amparore D, Diana P, Territo A, Campi R, et al. Three-dimensional augmented reality–guided robotic-assisted kidney transplantation: breaking the limit of atheromatic plaques. Eur Urol 2022;82:419-26.

- 35. Checcucci E, Piazzolla P, Marullo G, Innocente C, Salerno F, Ulrich L, et al. Development of bleeding artificial intelligence detector (BLAIR) system for robotic radical prostatectomy. J Clin Med 2023;12:7355.

- 36. Checcucci E, Pecoraro A, Amparore D, De Cillis S, Granato S, Volpi G, et al. The impact of 3D models on positive surgical margins after robot-assisted radical prostatectomy. World J Urol 2022;40:2221-9.

- 37. Porpiglia F, Checcucci E, Amparore D, Manfredi M, Massa F, Piazzolla P, et al. Three-dimensional elastic augmented-reality robot-assisted radical prostatectomy using hyperaccuracy three-dimensional reconstruction technology: a step further in the identification of capsular involvement. Eur Urol 2019;76:505-14.

- 38. Roehrborn CG, Teplitsky S, Das AK. Aquablation of the prostate: a review and update. Can J Urol 2019;26:20-4.

- 39. Klein J, Charalampogiannis N, Fiedler M, Wakileh G, Gözen A, Rassweiler J. Analysis of performance factors in 240 consecutive cases of robot-assisted flexible ureteroscopic stone treatment. J Robot Surg 2021;15:265-74.

- 40. O'Sullivan S, Leonard S, Holzinger A, Allen C, Battaglia F, Nevejans N, et al. Anatomy 101 for AI-driven robotics: explanatory, ethical and legal frameworks for development of cadaveric skills training standards in autonomous robotic surgery/autopsy. Int J Med Robot 2019;30:e2020.

- 41. O'Sullivan S, Leonard S, Holzinger A, Allen C, Battaglia F, Nevejans N, et al. Operational framework and training standard requirements for AI-empowered robotic surgery. Int J Med Robot 2020;16:1-13.

- 42. Taher H, Grasso V, Tawfik S, Gumbs A. The challenges of deep learning in artificial intelligence and autonomous actions in surgery: a literature review. Artif Intell Surg 2022;2:144-58.

, Hee Jo Yang


KAUTII
